Project Overview

A smart tool for deaf people. It recognizes sign language hand gestures using deep learning and converts them into full sentences that are grammatically correct and easy for a non-signer to understand. It recognizes signs that depend not only on spatial but also on temporal features: some signs start the same way but end differently, and that difference changes the entire meaning of the sign.

This project's development was divided into three submodules:

- i. Face Expression Recognition

- ii. Hand Gesture Recognition

- iii. Sentence Corrector

Face Expression Recognition

Some signs require facial expressions to convey their meaning. Although this module is included in the project, it is not yet fully integrated into the gesture recognition pipeline that builds sentences. This integration is planned for the future.

Hand Gesture Recognition

The Hand Gesture Recognition module recognizes signs from a live camera feed or a video file and converts them into words. It handles both spatial and temporal features. This module uses an LSTM together with an Inception CNN. The dataset for this module was custom-made by me. It contains 30 classes, including control classes, and consists of a series of videos for each class.
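The LSTM is the part of this design that can tell apart signs that start the same way but end differently. Below is a minimal pure-Python sketch of a standard LSTM cell run over a sequence of per-frame feature vectors. It assumes the Inception CNN has already turned each frame into a feature vector (the 3-dimensional toy vectors and the names `LSTMCell`, `sign_a`, `sign_b` are hypothetical) and it uses random placeholder weights instead of trained ones. It is a sketch of the technique, not this project's code.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LSTMCell:
    """Single LSTM cell with input (i), forget (f), output (o) gates and candidate (g)."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        n = input_size + hidden_size
        self.hidden_size = hidden_size
        # Placeholder random weights; a real model would learn these.
        # Each gate has one matrix applied to the concatenation [x_t, h_{t-1}].
        self.W = {
            gate: [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(hidden_size)]
            for gate in ("i", "f", "o", "g")
        }
        self.b = {gate: [0.0] * hidden_size for gate in ("i", "f", "o", "g")}

    def step(self, x, h, c):
        z = list(x) + list(h)
        pre = {
            gate: [w + bias for w, bias in zip(matvec(self.W[gate], z), self.b[gate])]
            for gate in self.W
        }
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        o = [sigmoid(v) for v in pre["o"]]
        g = [math.tanh(v) for v in pre["g"]]
        # The cell state carries information from earlier frames forward in time.
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new

    def run(self, frames):
        """Feed frames in order and return the final hidden state."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in frames:
            h, c = self.step(x, h, c)
        return h


# Two hypothetical signs whose first two frames are identical.
cell = LSTMCell(input_size=3, hidden_size=4, seed=42)
start = [[0.1, 0.9, 0.0], [0.2, 0.8, 0.1]]
sign_a = start + [[0.9, 0.1, 0.0]]
sign_b = start + [[0.0, 0.1, 0.9]]
h_a = cell.run(sign_a)
h_b = cell.run(sign_b)
print(h_a != h_b)
```

After the shared opening frames the two hidden states are identical; only the final frame separates them, which is the temporal behavior described above. In a full model the final hidden state would typically feed a softmax classification layer over the 30 classes, and a framework such as Keras or PyTorch would supply the trained LSTM.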

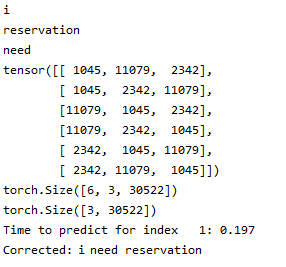

Sentence Corrector

Sometimes the predicted sequence of words did not form a correct sentence, so this module's job is to correct it.
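The overview does not say how the Sentence Corrector works, so the following is only a hypothetical, rule-based sketch of the kind of clean-up such a module might do: collapse repeated frame-level predictions of the same word, drop control-class labels, insert a missing form of "to be", and add capitalization and punctuation. The control label `idle`, the `COPULA` and `ADJECTIVES` tables, and all function names are assumptions for illustration. A general-purpose corrector would need something broader, such as a trained language model.

```python
from itertools import groupby

# Hypothetical control-class label; the dataset's real control class names are not listed.
CONTROL_LABELS = {"idle"}

# Hypothetical toy rule table: signed sentences often omit the verb "to be",
# so insert it between a pronoun and an adjective.
COPULA = {"i": "am", "you": "are", "he": "is", "she": "is", "we": "are", "they": "are"}
ADJECTIVES = {"hungry", "happy", "tired"}


def clean_predictions(words):
    """Collapse runs of repeated predictions, then drop control-class labels."""
    # Collapsing first means a control label between two identical words
    # keeps them as two separate signs.
    collapsed = [word for word, _ in groupby(words)]
    return [word for word in collapsed if word not in CONTROL_LABELS]


def insert_copula(words):
    """Insert a missing form of 'to be' between a pronoun and an adjective."""
    out = []
    for idx, word in enumerate(words):
        out.append(word)
        nxt = words[idx + 1] if idx + 1 < len(words) else None
        if word in COPULA and nxt in ADJECTIVES:
            out.append(COPULA[word])
    return out


def to_sentence(words):
    """Capitalize the pronoun 'I' and the first letter, then add a final period."""
    if not words:
        return ""
    words = ["I" if word == "i" else word for word in words]
    text = " ".join(words)
    return text[0].upper() + text[1:] + "."


def correct(words):
    return to_sentence(insert_copula(clean_predictions(words)))


print(correct(["i", "i", "idle", "hungry", "hungry"]))  # I am hungry.
```

Keeping each rule as a separate function makes it easy to swap one step, for example replacing `insert_copula` with a model-based grammar corrector, without touching the rest.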